Music Transformer

Music Generation with Transformers

From antique wind chimes to Classical-period musical dice games to the aleatoric music of the 20th century, humans have used generative, randomized, and statistical methods to create music. Since the late 1940s, computers have played a role in composition: as sound generators and instruments, as tools, and even as generators of musical material themselves, as in the Illiac Suite of 1957. Both rule-based and statistical processes have been explored for generating music. With recent advances in deep learning, neural networks have been applied successfully to music generation, and models such as OpenAI's Jukebox, Google's AudioLM, and Meta's MusicGen are rapidly advancing the state of the art in convincing audio generation.

For symbolic music, well-known models include FolkRNN, OpenAI's MuseNet, and DeepBach.

Many related tasks fall outside music generation itself, such as AI-driven performance of MIDI files or music transcription. Music generation can take many forms: generating accompaniments, counterpoint, continuations, variations, or additional layers of instrumentation, or generating music from text prompts or from other parameters such as genre, key, and tempo.

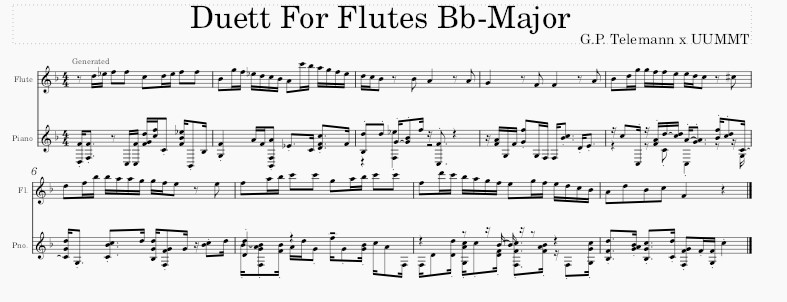

In this project, we set out to explore counterpoint generation in the context of transfer learning. We adapt the general-purpose MMT model for symbolic counterpoint generation in two distinct ways. The first is classical transfer learning: the pretrained model is fine-tuned on the MCMA dataset, a dataset of contrapuntal Baroque music, to transfer it to that style. In the second, the architecture and generation functions are altered to mirror the counterpoint-as-translation approach suggested by Nichols et al., while the weights of the MMT model are kept as the starting point for training. The implementation can be found here.
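The translation framing can be illustrated with a small data-preparation sketch: an existing voice plays the role of the source "sentence" and the voice to be generated plays the role of the target. The sketch assumes each voice is a list of (MIDI pitch, duration in beats) pairs and uses a made-up token vocabulary (`p60`, `d1.0`, `<bos>`, `<eos>`). This is not MMT's actual event encoding, and `tokenize` and `translation_pairs` are hypothetical helpers rather than part of the project's code.

```python
from itertools import permutations
from typing import Dict, List, Tuple

# Hypothetical note representation: (MIDI pitch, duration in beats).
Note = Tuple[int, float]
Voice = List[Note]


def tokenize(voice: Voice) -> List[str]:
    """Flatten a voice into a token sequence like ['p60', 'd1.0', ...] (illustrative vocabulary)."""
    tokens: List[str] = []
    for pitch, duration in voice:
        tokens.append(f"p{pitch}")
        tokens.append(f"d{duration}")
    return tokens


def translation_pairs(piece: Dict[str, Voice]) -> List[Tuple[str, str, List[str], List[str]]]:
    """Treat every ordered pair of voices as a (source, target) translation example.

    Returns tuples of (source voice name, target voice name, source tokens, target tokens).
    A piece with n voices yields n * (n - 1) pairs.
    """
    pairs = []
    for src_name, tgt_name in permutations(sorted(piece), 2):
        source = ["<bos>"] + tokenize(piece[src_name]) + ["<eos>"]
        target = ["<bos>"] + tokenize(piece[tgt_name]) + ["<eos>"]
        pairs.append((src_name, tgt_name, source, target))
    return pairs


if __name__ == "__main__":
    piece = {
        "soprano": [(72, 1.0), (71, 1.0), (72, 2.0)],
        "bass": [(48, 2.0), (43, 2.0)],
    }
    for src, tgt, source, target in translation_pairs(piece):
        print(f"{src} -> {tgt}: {source} => {target}")
```

Generating every ordered pair is only one possible choice; a setup that always conditions on a fixed given voice would instead keep just the pairs whose source is that voice.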

Collaborators: Connor Macdonald, Casper Smet, Riemer Dijkstra, Theo Bouwman